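What is pstore?

Originally, pstore (persistent storage) was designed to automatically save the contents of the kernel log buffer when the system hits an oops or panic. In current kernels its scope has grown considerably. It can also save console logs, ftrace messages and user-space logs, and it can store these messages on different kinds of storage, such as RAM, block devices or MTD devices.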

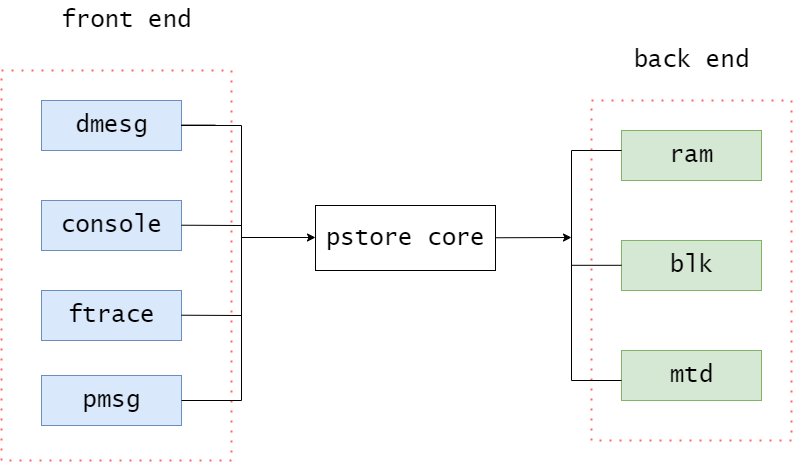

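To improve flexibility and extensibility, pstore uses a modular front-end/back-end architecture. Modules that supply data to pstore, such as dmesg and console, are called front-ends. Modules that actually store the data, such as RAM or block devices, are called back-ends. The pstore core sits between them and provides a unified registration and management interface for both.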

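This design decouples front-ends from back-ends. A new module that wants to save information through pstore can simply register as a new front-end. Likewise, supporting a new kind of storage device only requires adding a new back-end.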
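The minimal user-space C sketch below makes this decoupling concrete. It is not pstore code. The names demo_backend_ops, demo_core_write and the two front-end functions are hypothetical, and the "RAM" back-end is just a static array. It shows that front-ends only call the core, and the core forwards each write to whichever back-end has been registered.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical back-end interface: one write callback per back-end. */
struct demo_backend_ops {
    const char *name;
    int (*write)(int type, const char *data, size_t len);
};

/* The "core": front-ends only talk to it, never to a back-end directly. */
static const struct demo_backend_ops *demo_active;

void demo_core_register_backend(const struct demo_backend_ops *ops)
{
    demo_active = ops;
}

int demo_core_write(int type, const char *data, size_t len)
{
    /* No back-end registered yet: nothing can be stored. */
    return demo_active ? demo_active->write(type, data, len) : -1;
}

/* Two front-ends that differ only in the record type they pass on. */
enum { DEMO_TYPE_DMESG = 1, DEMO_TYPE_CONSOLE = 2 };

int demo_dmesg_frontend(const char *msg)
{
    return demo_core_write(DEMO_TYPE_DMESG, msg, strlen(msg));
}

int demo_console_frontend(const char *msg)
{
    return demo_core_write(DEMO_TYPE_CONSOLE, msg, strlen(msg));
}

/* One back-end: appends "<type digit><payload>" to a static RAM buffer. */
static char demo_ram[256];
static size_t demo_ram_used;

static int demo_ram_write(int type, const char *data, size_t len)
{
    if (demo_ram_used + 1 + len > sizeof(demo_ram))
        return -1;                               /* out of space */
    demo_ram[demo_ram_used++] = (char)('0' + type);
    memcpy(demo_ram + demo_ram_used, data, len);
    demo_ram_used += len;
    return 0;
}

const struct demo_backend_ops demo_ram_backend = { "demo-ram", demo_ram_write };
```

To switch to a different back-end, you only register another demo_backend_ops. The front-end functions stay unchanged.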

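pstore also implements its own pstore file system, which lets you inspect and manipulate the data saved before the last reboot. When this file system is mounted, the data stored in the back-end is read out and presented as files under the mount point.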

How pstore works

The main pstore source files live in the fs/pstore/ directory of the kernel source tree:

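```text
fs/pstore/
├── ftrace.c     # ftrace front-end
├── inode.c      # pstore file system registration and operations
├── internal.h
├── Kconfig
├── Makefile
├── platform.c   # core of the pstore front-end/back-end machinery
├── pmsg.c       # pmsg front-end
├── ram.c        # pstore/ram back-end: DRAM space allocation and management
└── ram_core.c   # pstore/ram back-end: DRAM read/write operations
```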

File creation

The pstore file system is usually mounted at /sys/fs/pstore:

```shell
# ls /sys/fs/pstore
console-ramoops-0 dmesg-ramoops-0
```

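- Console log: stored in the console-ramoops-* files in the pstore directory. These logs are captured through the console mechanism, so they are also subject to the printk log level and may be incomplete.
- oops/panic log: stored in the dmesg-ramoops-* files in the pstore directory. Depending on the buffer size there may be several such files, numbered from 0.
- Function call trace (ftrace) log: stored in the ftrace-ramoops-* files in the pstore directory.

The key function for creating these files is pstore_mkfile in inode.c: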

```c
/*
 * Make a regular file in the root directory of our file system.
 * Load it up with "size" bytes of data from "buf".
 * Set the mtime & ctime to the date that this record was originally stored.
 */
int pstore_mkfile(enum pstore_type_id type, char *psname, u64 id, int count,
                  char *data, bool compressed, size_t size,
                  struct timespec time, struct pstore_info *psi)
{
    ...
    rc = -ENOMEM;
    inode = pstore_get_inode(pstore_sb);
    ...
    switch (type) {
    case PSTORE_TYPE_DMESG:
        scnprintf(name, sizeof(name), "dmesg-%s-%lld%s",
                  psname, id, compressed ? ".enc.z" : "");
        break;
    case PSTORE_TYPE_CONSOLE:
        scnprintf(name, sizeof(name), "console-%s-%lld", psname, id);
        break;
    case PSTORE_TYPE_FTRACE:
        scnprintf(name, sizeof(name), "ftrace-%s-%lld", psname, id);
        break;
    case PSTORE_TYPE_MCE:
        scnprintf(name, sizeof(name), "mce-%s-%lld", psname, id);
        break;
    case PSTORE_TYPE_PPC_RTAS:
        scnprintf(name, sizeof(name), "rtas-%s-%lld", psname, id);
        break;
    case PSTORE_TYPE_PPC_OF:
        scnprintf(name, sizeof(name), "powerpc-ofw-%s-%lld",
                  psname, id);
        break;
    case PSTORE_TYPE_PPC_COMMON:
        scnprintf(name, sizeof(name), "powerpc-common-%s-%lld",
                  psname, id);
        break;
    case PSTORE_TYPE_PMSG:
        scnprintf(name, sizeof(name), "pmsg-%s-%lld", psname, id);
        break;
    case PSTORE_TYPE_PPC_OPAL:
        sprintf(name, "powerpc-opal-%s-%lld", psname, id);
        break;
    case PSTORE_TYPE_UNKNOWN:
        scnprintf(name, sizeof(name), "unknown-%s-%lld", psname, id);
        break;
    default:
        scnprintf(name, sizeof(name), "type%d-%s-%lld",
                  type, psname, id);
        break;
    }
    ...
    dentry = d_alloc_name(root, name);
    ...
    d_add(dentry, inode);
    ...
}
```

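pstore_mkfile builds the file name according to the record type, using scnprintf (sprintf in the PPC_OPAL case). The name usually has the form <type>-<psname>-<id>, where psname is the back-end name (for example ramoops) and id identifies the record. Compressed dmesg records also get an .enc.z suffix. The function then creates a directory entry (dentry) in the root directory with d_alloc_name. Finally, d_add associates the dentry with the inode, and the file becomes visible in the file system.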
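The naming logic can be reproduced outside the kernel. The sketch below is a hypothetical helper, demo_record_name, with a reduced enum of record types. It uses snprintf instead of the kernel's scnprintf and covers only a few cases from the switch above, plus the numeric fallback.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical subset of the pstore record types. */
enum demo_pstore_type { DEMO_DMESG, DEMO_CONSOLE, DEMO_FTRACE, DEMO_PMSG, DEMO_OTHER };

/*
 * Build "<type>-<psname>-<id>" like the switch in pstore_mkfile;
 * compressed dmesg records get the ".enc.z" suffix.
 */
int demo_record_name(char *name, size_t len, enum demo_pstore_type type,
                     const char *psname, unsigned long long id, bool compressed)
{
    switch (type) {
    case DEMO_DMESG:
        return snprintf(name, len, "dmesg-%s-%llu%s", psname, id,
                        compressed ? ".enc.z" : "");
    case DEMO_CONSOLE:
        return snprintf(name, len, "console-%s-%llu", psname, id);
    case DEMO_FTRACE:
        return snprintf(name, len, "ftrace-%s-%llu", psname, id);
    case DEMO_PMSG:
        return snprintf(name, len, "pmsg-%s-%llu", psname, id);
    default:
        /* fallback mirrors the kernel's "type%d-..." default case */
        return snprintf(name, len, "type%d-%s-%llu", (int)type, psname, id);
    }
}
```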

pstore_register

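A back-end such as ramoops writes messages into a dedicated RAM region. The platform module (platform.c) reads the saved records back through the back-end's callbacks and exposes them as files under /sys/fs/pstore. We start with the core code in platform.c.

A back-end registers itself by calling pstore_register: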

```c
/*
 * platform specific persistent storage driver registers with
 * us here. If pstore is already mounted, call the platform
 * read function right away to populate the file system. If not
 * then the pstore mount code will call us later to fill out
 * the file system.
 */
int pstore_register(struct pstore_info *psi)
{
    struct module *owner = psi->owner;

    if (backend && strcmp(backend, psi->name))
        return -EPERM;

    spin_lock(&pstore_lock);
    if (psinfo) {
        spin_unlock(&pstore_lock);
        return -EBUSY;
    }

    if (!psi->write)
        psi->write = pstore_write_compat;
    if (!psi->write_buf_user)
        psi->write_buf_user = pstore_write_buf_user_compat;
    psinfo = psi;
    mutex_init(&psinfo->read_mutex);
    spin_unlock(&pstore_lock);
    ...
    /*
     * Update the module parameter backend, so it is visible
     * through /sys/module/pstore/parameters/backend
     */
    backend = psi->name;

    module_put(owner);
```

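Registration is guarded by two checks. First, if the backend module parameter is set, only a back-end with that name is accepted; any other returns -EPERM. Second, if psinfo is already set, registration fails with -EBUSY. Together these checks ensure that only one back-end is active at a time. The accepted back-end is recorded in the global psinfo pointer. Next is the key data structure, pstore_info: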

```c
struct pstore_info {
    struct module *owner;
    char *name;
    spinlock_t buf_lock;        /* serialize access to 'buf' */
    char *buf;
    size_t bufsize;
    struct mutex read_mutex;    /* serialize open/read/close */
    int flags;
    int (*open)(struct pstore_info *psi);
    int (*close)(struct pstore_info *psi);
    ssize_t (*read)(u64 *id, enum pstore_type_id *type,
                    int *count, struct timespec *time, char **buf,
                    bool *compressed, ssize_t *ecc_notice_size,
                    struct pstore_info *psi);
    int (*write)(enum pstore_type_id type,
                 enum kmsg_dump_reason reason, u64 *id,
                 unsigned int part, int count, bool compressed,
                 size_t size, struct pstore_info *psi);
    int (*write_buf)(enum pstore_type_id type,
                     enum kmsg_dump_reason reason, u64 *id,
                     unsigned int part, const char *buf, bool compressed,
                     size_t size, struct pstore_info *psi);
    int (*write_buf_user)(enum pstore_type_id type,
                          enum kmsg_dump_reason reason, u64 *id,
                          unsigned int part, const char __user *buf,
                          bool compressed, size_t size, struct pstore_info *psi);
    int (*erase)(enum pstore_type_id type, u64 id,
                 int count, struct timespec time,
                 struct pstore_info *psi);
    void *data;
};
```

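The name field holds the back-end's name. write and write_buf_user are callback pointers. If the back-end does not implement them, the core installs default compatibility functions (pstore_write_compat and pstore_write_buf_user_compat), and both of these end up calling the back-end's write_buf: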

```c
if (!psi->write)
    psi->write = pstore_write_compat;
if (!psi->write_buf_user)
    psi->write_buf_user = pstore_write_buf_user_compat;

static int pstore_write_compat(enum pstore_type_id type,
                               enum kmsg_dump_reason reason,
                               u64 *id, unsigned int part, int count,
                               bool compressed, size_t size,
                               struct pstore_info *psi)
{
    return psi->write_buf(type, reason, id, part, psinfo->buf, compressed,
                          size, psi);
}

static int pstore_write_buf_user_compat(enum pstore_type_id type,
                                        enum kmsg_dump_reason reason,
                                        u64 *id, unsigned int part,
                                        const char __user *buf,
                                        bool compressed, size_t size,
                                        struct pstore_info *psi)
{
    ...
    ret = psi->write_buf(type, reason, id, part, psinfo->buf,
    ...
}
```
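The two registration rules (a single active back-end, and a name that matches the backend parameter) and the compat fallback can be modeled in a few lines. This is a simplified sketch under stated assumptions. struct demo_info is a hypothetical reduction of struct pstore_info, there is no locking or module reference counting, and only the write/write_buf pair is modeled.

```c
#include <errno.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, heavily reduced stand-in for struct pstore_info. */
struct demo_info {
    const char *name;
    char buf[64];   /* staging buffer, like psinfo->buf */
    int (*write)(struct demo_info *psi, size_t size);
    int (*write_buf)(struct demo_info *psi, const char *buf, size_t size);
};

static struct demo_info *demo_psinfo;  /* plays the role of the global psinfo */
static const char *demo_backend;       /* plays the role of the "backend" parameter */

/* Compat path: hand the bytes already staged in psi->buf to write_buf. */
static int demo_write_compat(struct demo_info *psi, size_t size)
{
    return psi->write_buf(psi, psi->buf, size);
}

int demo_register(struct demo_info *psi)
{
    if (demo_backend && strcmp(demo_backend, psi->name))
        return -EPERM;          /* a different back-end was selected */
    if (demo_psinfo)
        return -EBUSY;          /* a back-end is already registered */
    if (!psi->write)
        psi->write = demo_write_compat;
    demo_psinfo = psi;
    demo_backend = psi->name;   /* remember which back-end won */
    return 0;
}

/* Example back-end that implements only write_buf and counts bytes. */
static size_t demo_bytes_written;

static int demo_ram_write_buf(struct demo_info *psi, const char *buf, size_t size)
{
    (void)psi;
    (void)buf;
    demo_bytes_written += size;
    return 0;
}
```

A back-end that supplies only write_buf still gets a working write, because registration installs the compat wrapper. A second back-end is rejected with -EBUSY, and one whose name differs from the selected backend is rejected with -EPERM.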

Next comes the rest of pstore_register:

```c
if (pstore_is_mounted())
    pstore_get_records(0);
```

If the pstore file system is already mounted, pstore_get_records is called right away to create and populate the files:

```c
/*
 * Read all the records from the persistent store. Create
 * files in our filesystem. Don't warn about -EEXIST errors
 * when we are re-scanning the backing store looking to add new
 * error records.
 */
void pstore_get_records(int quiet)
{
    struct pstore_info *psi = psinfo; /* the global psinfo */
    ...
    mutex_lock(&psi->read_mutex);
    if (psi->open && psi->open(psi))
        goto out;

    while ((size = psi->read(&id, &type, &count, &time, &buf, &compressed,
                             &ecc_notice_size, psi)) > 0) {
        if (compressed && (type == PSTORE_TYPE_DMESG)) {
            if (big_oops_buf)
                unzipped_len = pstore_decompress(buf,
                                                 big_oops_buf, size,
                                                 big_oops_buf_sz);

            if (unzipped_len > 0) {
                if (ecc_notice_size)
                    memcpy(big_oops_buf + unzipped_len,
                           buf + size, ecc_notice_size);
                kfree(buf);
                buf = big_oops_buf;
                size = unzipped_len;
                compressed = false;
            } else {
                pr_err("decompression failed;returned %d\n",
                       unzipped_len);
                compressed = true;
            }
        }
        rc = pstore_mkfile(type, psi->name, id, count, buf,
                           compressed, size + ecc_notice_size,
                           time, psi);
        if (unzipped_len < 0) {
            /* Free buffer other than big oops */
            kfree(buf);
            buf = NULL;
        } else
            unzipped_len = -1;
        if (rc && (rc != -EEXIST || !quiet))
            failed++;
    }
    if (psi->close)
        psi->close(psi);
out:
    mutex_unlock(&psi->read_mutex);
}
```

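The function keeps calling the back-end's read callback to fetch records. When needed, for example for a compressed dmesg record, it calls pstore_decompress. It then calls pstore_mkfile to create the corresponding file in the pstore file system.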
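Without decompression and ECC handling, the loop reduces to three steps: open, read until the back-end reports no more records, close. The minimal sketch below assumes a hypothetical in-memory back-end (demo_backend with a read cursor), and creating a file is reduced to formatting its name.

```c
#include <stdio.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical record, standing in for what psi->read hands back. */
struct demo_record {
    const char *type;
    unsigned long long id;
    const char *data;
};

/* Hypothetical back-end: a fixed array of records plus a read cursor. */
struct demo_backend {
    const struct demo_record *recs;
    size_t nrecs;
    size_t cursor;
};

/* Like psi->open: rewind so the next read starts from the first record. */
static int demo_open(struct demo_backend *be)
{
    be->cursor = 0;
    return 0;
}

/* Like psi->read: return the record's size, or 0 when nothing is left. */
static long demo_read(struct demo_backend *be, const struct demo_record **out)
{
    if (be->cursor >= be->nrecs)
        return 0;
    *out = &be->recs[be->cursor++];
    return (long)strlen((*out)->data);
}

/* Read until the back-end returns <= 0, "creating" one name per record. */
size_t demo_get_records(struct demo_backend *be, const char *psname,
                        char names[][64], size_t max)
{
    const struct demo_record *rec;
    size_t n = 0;

    if (demo_open(be))
        return 0;
    while (n < max && demo_read(be, &rec) > 0) {
        snprintf(names[n], 64, "%s-%s-%llu", rec->type, psname, rec->id);
        n++;
    }
    return n;
}
```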

The next registration step registers the individual front-ends according to the flags the back-end supports:

```c
if (psi->flags & PSTORE_FLAGS_DMESG)
    pstore_register_kmsg();
if (psi->flags & PSTORE_FLAGS_CONSOLE)
    pstore_register_console();
if (psi->flags & PSTORE_FLAGS_FTRACE)
    pstore_register_ftrace();
if (psi->flags & PSTORE_FLAGS_PMSG)
    pstore_register_pmsg();
```

psi->flags is set by the back-end. This article focuses on pstore_register_kmsg and pstore_register_console.

Registering the pstore panic log (kmsg) front-end

```c
static struct kmsg_dumper pstore_dumper = {
    .dump = pstore_dump,
};

/*
 * Register with kmsg_dump to save last part of console log on panic.
 */
static void pstore_register_kmsg(void)
{
    kmsg_dump_register(&pstore_dumper);
}
```

pstore_dump eventually calls the back-end's write function through the global psinfo pointer:

```c
/*
 * callback from kmsg_dump. (s2,l2) has the most recently
 * written bytes, older bytes are in (s1,l1). Save as much
 * as we can from the end of the buffer.
 */
static void pstore_dump(struct kmsg_dumper *dumper,
                        enum kmsg_dump_reason reason)
{
    ...
    ret = psinfo->write(PSTORE_TYPE_DMESG, reason, &id, part,
                        oopscount, compressed, total_len, psinfo);
```

kmsg_dump_register is the kernel mechanism for registering log dumpers. When the kernel oopses or panics, kmsg_dump calls every registered dumper:

```c
/**
 * kmsg_dump_register - register a kernel log dumper.
 * @dumper: pointer to the kmsg_dumper structure
 *
 * Adds a kernel log dumper to the system. The dump callback in the
 * structure will be called when the kernel oopses or panics and must be
 * set. Returns zero on success and %-EINVAL or %-EBUSY otherwise.
 */
int kmsg_dump_register(struct kmsg_dumper *dumper)
{
    unsigned long flags;
    int err = -EBUSY;

    /* The dump callback needs to be set */
    if (!dumper->dump)
        return -EINVAL;

    spin_lock_irqsave(&dump_list_lock, flags);
    /* Don't allow registering multiple times */
    if (!dumper->registered) {
        dumper->registered = 1;
        list_add_tail_rcu(&dumper->list, &dump_list);
        err = 0;
    }
    spin_unlock_irqrestore(&dump_list_lock, flags);

    return err;
}

/**
 * kmsg_dump - dump kernel log to kernel message dumpers.
 * @reason: the reason (oops, panic etc) for dumping
 *
 * Call each of the registered dumper's dump() callback, which can
 * retrieve the kmsg records with kmsg_dump_get_line() or
 * kmsg_dump_get_buffer().
 */
void kmsg_dump(enum kmsg_dump_reason reason)
{
    struct kmsg_dumper *dumper;
    unsigned long flags;

    if ((reason > KMSG_DUMP_OOPS) && !always_kmsg_dump)
        return;

    rcu_read_lock();
    list_for_each_entry_rcu(dumper, &dump_list, list) {
        if (dumper->max_reason && reason > dumper->max_reason)
            continue;

        /* initialize iterator with data about the stored records */
        dumper->active = true;

        raw_spin_lock_irqsave(&logbuf_lock, flags);
        dumper->cur_seq = clear_seq;
        dumper->cur_idx = clear_idx;
        dumper->next_seq = log_next_seq;
        dumper->next_idx = log_next_idx;
        raw_spin_unlock_irqrestore(&logbuf_lock, flags);

        /* invoke dumper which will iterate over records */
        dumper->dump(dumper, reason);

        /* reset iterator */
        dumper->active = false;
    }
    rcu_read_unlock();
}
```
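The dumper list logic can be sketched in plain C: reject a missing callback, refuse double registration, and filter by reason. This is an illustrative model only. demo_dumper and demo_reason are hypothetical. The list is a plain singly linked list without RCU or locking, and the log-buffer iterator setup is omitted. The enum ordering follows enum kmsg_dump_reason, where panic and oops have the lowest values, so any reason above DEMO_OOPS is skipped unless demo_always_dump is set.

```c
#include <errno.h>
#include <stdbool.h>
#include <stddef.h>

/* Ordering modeled on enum kmsg_dump_reason; the names are hypothetical. */
enum demo_reason { DEMO_UNDEF, DEMO_PANIC, DEMO_OOPS, DEMO_EMERG, DEMO_RESTART };

struct demo_dumper {
    void (*dump)(struct demo_dumper *d, enum demo_reason reason);
    enum demo_reason max_reason;     /* DEMO_UNDEF (0) means "no limit" */
    bool registered;
    struct demo_dumper *next;
};

static struct demo_dumper *demo_list;   /* registered dumpers, in order */
static bool demo_always_dump;           /* plays the role of always_kmsg_dump */

int demo_dump_register(struct demo_dumper *d)
{
    struct demo_dumper **pp = &demo_list;

    if (!d->dump)
        return -EINVAL;                 /* the callback must be set */
    if (d->registered)
        return -EBUSY;                  /* no double registration */
    d->registered = true;
    d->next = NULL;
    while (*pp)                         /* append at the tail */
        pp = &(*pp)->next;
    *pp = d;
    return 0;
}

void demo_dump(enum demo_reason reason)
{
    if (reason > DEMO_OOPS && !demo_always_dump)
        return;                         /* only panic/oops by default */
    for (struct demo_dumper *d = demo_list; d; d = d->next) {
        if (d->max_reason && reason > d->max_reason)
            continue;                   /* this dumper opted out */
        d->dump(d, reason);
    }
}

/* Example callback that just counts how often it was invoked. */
static int demo_calls;

static void demo_count_dump(struct demo_dumper *d, enum demo_reason reason)
{
    (void)d;
    (void)reason;
    demo_calls++;
}
```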

Registering the pstore console front-end

```c
static struct console pstore_console = {
    .name  = "pstore",
    .write = pstore_console_write,
    .flags = CON_PRINTBUFFER | CON_ENABLED | CON_ANYTIME,
    .index = -1,
};

static void pstore_register_console(void)
{
    register_console(&pstore_console);
}
```

Its ->write callback also ends up calling the back-end's write function:

```c
#ifdef CONFIG_PSTORE_CONSOLE
static void pstore_console_write(struct console *con, const char *s, unsigned c)
{
    const char *e = s + c;

    while (s < e) {
        unsigned long flags;
        u64 id;

        if (c > psinfo->bufsize)
            c = psinfo->bufsize;

        if (oops_in_progress) {
            if (!spin_trylock_irqsave(&psinfo->buf_lock, flags))
                break;
        } else {
            spin_lock_irqsave(&psinfo->buf_lock, flags);
        }
        memcpy(psinfo->buf, s, c);
        psinfo->write(PSTORE_TYPE_CONSOLE, 0, &id, 0, 0, 0, c, psinfo); /* hand off to the back-end */
        spin_unlock_irqrestore(&psinfo->buf_lock, flags);
        s += c;
        c = e - s;
    }
}
#endif
```
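The chunking in pstore_console_write is easy to overlook. A console message longer than psinfo->bufsize is split into bufsize-sized pieces, and each piece is copied into the shared buffer and passed to the back-end separately. The minimal sketch below isolates that loop. It assumes a hypothetical demo_sink whose 8-byte buf stands in for psinfo->buf, and it leaves out the buf_lock handling and the oops_in_progress trylock.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for psinfo plus a back-end that logs each call. */
struct demo_sink {
    char buf[8];          /* plays the role of psinfo->buf, bufsize = 8 */
    size_t chunks[16];    /* size passed to each back-end write */
    size_t nchunks;
    char out[128];        /* concatenation of everything written */
    size_t outlen;
};

/* Plays the role of psinfo->write(PSTORE_TYPE_CONSOLE, ..., c, psinfo). */
static void demo_backend_write(struct demo_sink *s, size_t c)
{
    s->chunks[s->nchunks++] = c;
    memcpy(s->out + s->outlen, s->buf, c);
    s->outlen += c;
}

/* Same loop shape as pstore_console_write, minus the locking. */
void demo_console_write(struct demo_sink *s, const char *str, size_t c)
{
    const char *e = str + c;

    while (str < e) {
        if (c > sizeof(s->buf))
            c = sizeof(s->buf);       /* never more than bufsize at once */
        memcpy(s->buf, str, c);
        demo_backend_write(s, c);
        str += c;
        c = (size_t)(e - str);        /* bytes still to go */
    }
}
```

A 20-byte message with an 8-byte buffer therefore reaches the back-end as three writes of 8, 8 and 4 bytes.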

ramoops

Now for the concrete RAM back-end, ramoops. We start with its probe function:

```c
static int ramoops_probe(struct platform_device *pdev)
{
    struct device *dev = &pdev->dev;
    struct ramoops_platform_data *pdata = dev->platform_data;
    ...
    if (!pdata->mem_size || (!pdata->record_size && !pdata->console_size &&
            !pdata->ftrace_size && !pdata->pmsg_size)) {
        pr_err("The memory size and the record/console size must be "
               "non-zero\n");
        goto fail_out;
    }
    ...
    cxt->size = pdata->mem_size;
    cxt->phys_addr = pdata->mem_address;
    cxt->memtype = pdata->mem_type;
    cxt->record_size = pdata->record_size;
    cxt->console_size = pdata->console_size;
    cxt->ftrace_size = pdata->ftrace_size;
    cxt->pmsg_size = pdata->pmsg_size;
    cxt->dump_oops = pdata->dump_oops;
    cxt->ecc_info = pdata->ecc_info;
```

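When ramoops is configured through module parameters, pdata comes from ramoops_register_dummy. That function fills pdata from the parameters and registers a "ramoops" platform device. On device tree systems the values can come from the DT node instead, as shown later.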

```c
static void ramoops_register_dummy(void)
{
    ...
    pr_info("using module parameters\n");

    dummy_data = kzalloc(sizeof(*dummy_data), GFP_KERNEL);
    if (!dummy_data) {
        pr_info("could not allocate pdata\n");
        return;
    }

    dummy_data->mem_size = mem_size;
    dummy_data->mem_address = mem_address;
    dummy_data->mem_type = mem_type;
    dummy_data->record_size = record_size;
    dummy_data->console_size = ramoops_console_size;
    dummy_data->ftrace_size = ramoops_ftrace_size;
    dummy_data->pmsg_size = ramoops_pmsg_size;
    dummy_data->dump_oops = dump_oops;
    /*
     * For backwards compatibility ramoops.ecc=1 means 16 bytes ECC
     * (using 1 byte for ECC isn't much of use anyway).
     */
    dummy_data->ecc_info.ecc_size = ramoops_ecc == 1 ? 16 : ramoops_ecc;

    dummy = platform_device_register_data(NULL, "ramoops", -1,
            dummy_data, sizeof(struct ramoops_platform_data));
```

Several key configurable parameters are defined in struct ramoops_platform_data:

```c
/*
 * Ramoops platform data
 * @mem_size memory size for ramoops
 * @mem_address physical memory address to contain ramoops
 */
struct ramoops_platform_data {
    unsigned long mem_size;
    phys_addr_t mem_address;
    unsigned int mem_type;
    unsigned long record_size;
    unsigned long console_size;
    unsigned long ftrace_size;
    unsigned long pmsg_size;
    int dump_oops;
    struct persistent_ram_ecc_info ecc_info;
};
```

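- mem_size: total amount of memory given to ramoops.
- mem_address: physical start address of the memory used by ramoops.
- mem_type: memory type (optional).
- record_size: size of each oops/panic record.
- console_size: size of the console log region.
- ftrace_size: size of the ftrace log region.
- pmsg_size: size of the pmsg (user-space message) region.
- dump_oops: flag controlling whether a dump is also taken on oops.
- ecc_info: ECC (error-correcting code) configuration for the RAM.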
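Two small pieces of the parameter handling above can be checked in isolation. The first is the sanity check at the top of ramoops_probe: mem_size must be non-zero, and at least one zone size must be non-zero. The second is the backwards-compatible ECC mapping in ramoops_register_dummy. In this sketch, struct demo_pdata is a hypothetical reduction of ramoops_platform_data.

```c
#include <stdbool.h>

/* Hypothetical reduction of ramoops_platform_data to the fields checked. */
struct demo_pdata {
    unsigned long mem_size;
    unsigned long record_size, console_size, ftrace_size, pmsg_size;
};

/* Mirrors the check in ramoops_probe: memory and at least one zone size. */
bool demo_pdata_valid(const struct demo_pdata *p)
{
    return p->mem_size && (p->record_size || p->console_size ||
                           p->ftrace_size || p->pmsg_size);
}

/* Mirrors "ramoops.ecc=1 means 16 bytes ECC" in ramoops_register_dummy. */
int demo_ecc_size(int ramoops_ecc)
{
    return ramoops_ecc == 1 ? 16 : ramoops_ecc;
}
```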

Another important structure, ramoops_context, holds the runtime context of ramoops:

```c
struct ramoops_context {
    struct persistent_ram_zone **przs;
    struct persistent_ram_zone *cprz;
    struct persistent_ram_zone *fprz;
    struct persistent_ram_zone *mprz;
    phys_addr_t phys_addr;
    unsigned long size;
    unsigned int memtype;
    size_t record_size;
    size_t console_size;
    size_t ftrace_size;
    size_t pmsg_size;
    int dump_oops;
    struct persistent_ram_ecc_info ecc_info;
    unsigned int max_dump_cnt;
    unsigned int dump_write_cnt;
    /* _read_cnt need clear on ramoops_pstore_open */
    unsigned int dump_read_cnt;
    unsigned int console_read_cnt;
    unsigned int ftrace_read_cnt;
    unsigned int pmsg_read_cnt;
    struct pstore_info pstore;
};
```

In ramoops_probe, the members of the platform data are copied into the corresponding fields of this context. The rest of the probe function shows the memory layout:

```c
paddr = cxt->phys_addr;

dump_mem_sz = cxt->size - cxt->console_size - cxt->ftrace_size
              - cxt->pmsg_size;
err = ramoops_init_przs(dev, cxt, &paddr, dump_mem_sz);
if (err)
    goto fail_out;

err = ramoops_init_prz(dev, cxt, &cxt->cprz, &paddr,
                       cxt->console_size, 0);
if (err)
    goto fail_init_cprz;

err = ramoops_init_prz(dev, cxt, &cxt->fprz, &paddr, cxt->ftrace_size,
                       LINUX_VERSION_CODE);
if (err)
    goto fail_init_fprz;

err = ramoops_init_prz(dev, cxt, &cxt->mprz, &paddr, cxt->pmsg_size, 0);
if (err)
    goto fail_init_mprz;

cxt->pstore.data = cxt;
```

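ramoops initializes the persistent RAM zones (persistent_ram_zone) one after another, in this order: dump records, console, ftrace, pmsg. paddr moves forward past each zone. The total memory (size) is therefore split into four parts that satisfy: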

```text
dump_mem_sz + cxt->console_size + cxt->ftrace_size + cxt->pmsg_size = cxt->size
```
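Plugging numbers into this relation shows where each zone ends up. The sketch below follows the zone order used in ramoops_probe. It assumes a non-zero record_size and a dump area that is an exact multiple of it, so no gap is left before the console zone. demo_compute_layout is a hypothetical helper. The device tree example later in this article uses 0xf0000 bytes at 0x110000, console 0x80000, ftrace 0 and pmsg 0x50000. With those values, the dump area is 0x20000 bytes.

```c
#include <stdint.h>

/* Hypothetical summary of where each ramoops zone would start. */
struct demo_layout {
    uint64_t dump_mem_sz;
    uint64_t dump_start, console_start, ftrace_start, pmsg_start;
};

/*
 * Zone order as in ramoops_probe: dump records, console, ftrace, pmsg.
 * Assumes the dump area is fully used by records of a non-zero size.
 * Returns 0 on success, -1 if the other zones alone exceed mem_size.
 */
int demo_compute_layout(uint64_t phys, uint64_t size, uint64_t console,
                        uint64_t ftrace, uint64_t pmsg, struct demo_layout *l)
{
    if (console + ftrace + pmsg > size)
        return -1;
    /* dump_mem_sz = size - console_size - ftrace_size - pmsg_size */
    l->dump_mem_sz = size - console - ftrace - pmsg;
    l->dump_start = phys;
    l->console_start = l->dump_start + l->dump_mem_sz;
    l->ftrace_start = l->console_start + console;
    l->pmsg_start = l->ftrace_start + ftrace;
    return 0;
}
```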

The relationship between record_size and dump_mem_sz appears in ramoops_init_przs:

```c
static int ramoops_init_przs(struct device *dev, struct ramoops_context *cxt,
                             phys_addr_t *paddr, size_t dump_mem_sz)
{
    int err = -ENOMEM;
    int i;

    if (!cxt->record_size)
        return 0;

    if (*paddr + dump_mem_sz - cxt->phys_addr > cxt->size) {
        dev_err(dev, "no room for dumps\n");
        return -ENOMEM;
    }

    cxt->max_dump_cnt = dump_mem_sz / cxt->record_size;
    if (!cxt->max_dump_cnt)
        return -ENOMEM;
```

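This code shows that the oops/panic area of dump_mem_sz bytes is divided into max_dump_cnt records of record_size bytes each. Because this is integer division, any remainder is not used for records. persistent_ram_new then allocates and sets up a RAM zone for each record: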

```c
for (i = 0; i < cxt->max_dump_cnt; i++) {
    cxt->przs[i] = persistent_ram_new(*paddr, cxt->record_size, 0,
                                      &cxt->ecc_info,
                                      cxt->memtype, 0);
```

The console, ftrace and pmsg RAM zones are allocated in a similar way.
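The sketch below shows how the dump area is split into records. It relies on one assumption: the part of the loop that moves *paddr forward is elided in the excerpt above, and the sketch assumes it advances by record_size per record. Under that assumption, any part of dump_mem_sz too small to hold a whole record stays unused. demo_dump_slots is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Carve the dump area into record_size slots, as ramoops_init_przs does
 * with max_dump_cnt = dump_mem_sz / record_size. Assumption: *paddr moves
 * forward by record_size per record. Stores at most max slot addresses
 * and returns the record count (0 if record_size is 0).
 */
size_t demo_dump_slots(uint64_t *paddr, uint64_t dump_mem_sz,
                       uint64_t record_size, uint64_t *slots, size_t max)
{
    size_t cnt;

    if (!record_size)
        return 0;
    cnt = (size_t)(dump_mem_sz / record_size);
    for (size_t i = 0; i < cnt; i++) {
        if (i < max)
            slots[i] = *paddr;   /* where persistent_ram_new would map slot i */
        *paddr += record_size;
    }
    return cnt;
}
```

For example, a 0x50000-byte dump area with record_size 0x20000 gives max_dump_cnt = 2, and the last 0x10000 bytes are not used for records.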

Finally, the flags are set according to the configuration, and the back-end registers with the pstore core:

```c
cxt->pstore.flags = PSTORE_FLAGS_DMESG; /* the dmesg (oops/panic) front-end is always enabled */
if (cxt->console_size)
    cxt->pstore.flags |= PSTORE_FLAGS_CONSOLE;
if (cxt->ftrace_size)
    cxt->pstore.flags |= PSTORE_FLAGS_FTRACE;
if (cxt->pmsg_size)
    cxt->pstore.flags |= PSTORE_FLAGS_PMSG;

err = pstore_register(&cxt->pstore);
if (err) {
    pr_err("registering with pstore failed\n");
    goto fail_buf;
}
```
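The sketch below models the flag selection together with the front-end registration it triggers in pstore_register. The DEMO_FLAGS_* bit values are illustrative, not the kernel's actual PSTORE_FLAGS_* values, and demo_frontends only returns names instead of registering anything.

```c
#include <stddef.h>

/* Illustrative bit values; not the kernel's real PSTORE_FLAGS_* values. */
enum {
    DEMO_FLAGS_DMESG   = 1 << 0,
    DEMO_FLAGS_CONSOLE = 1 << 1,
    DEMO_FLAGS_FTRACE  = 1 << 2,
    DEMO_FLAGS_PMSG    = 1 << 3,
};

/* Same decision as ramoops_probe: dmesg always, the rest only if sized. */
unsigned int demo_ramoops_flags(unsigned long console_size,
                                unsigned long ftrace_size,
                                unsigned long pmsg_size)
{
    unsigned int flags = DEMO_FLAGS_DMESG;

    if (console_size)
        flags |= DEMO_FLAGS_CONSOLE;
    if (ftrace_size)
        flags |= DEMO_FLAGS_FTRACE;
    if (pmsg_size)
        flags |= DEMO_FLAGS_PMSG;
    return flags;
}

/* Names of the front-ends pstore_register would enable for these flags. */
size_t demo_frontends(unsigned int flags, const char *out[4])
{
    size_t n = 0;

    if (flags & DEMO_FLAGS_DMESG)
        out[n++] = "kmsg";
    if (flags & DEMO_FLAGS_CONSOLE)
        out[n++] = "console";
    if (flags & DEMO_FLAGS_FTRACE)
        out[n++] = "ftrace";
    if (flags & DEMO_FLAGS_PMSG)
        out[n++] = "pmsg";
    return n;
}
```

With the device tree sizes used later in this article (console 0x80000, ftrace 0, pmsg 0x50000), the kmsg, console and pmsg front-ends would be enabled, but ftrace would not.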

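ramoops defines its pstore callbacks in the pstore_info embedded in oops_cxt. Once pstore_register succeeds, the global psinfo points at this structure, and the core calls the callbacks through psinfo: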

```c
static struct ramoops_context oops_cxt = {
    .pstore = {
        .owner          = THIS_MODULE,
        .name           = "ramoops",
        .open           = ramoops_pstore_open,
        .read           = ramoops_pstore_read,           /* psi->read */
        .write_buf      = ramoops_pstore_write_buf,      /* for non-pmsg records */
        .write_buf_user = ramoops_pstore_write_buf_user, /* for pmsg */
        .erase          = ramoops_pstore_erase,
    },
};
```

Using pstore

ramoops

Kernel configuration

Enable the following options in the Linux kernel:

```text
CONFIG_PSTORE=y
CONFIG_PSTORE_CONSOLE=y
CONFIG_PSTORE_PMSG=y
CONFIG_PSTORE_RAM=y
CONFIG_PANIC_TIMEOUT=-1
```

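Note: ramoops keeps the log data in DDR memory, which loses its contents on power-off. The logs can therefore only be read after the system reboots by itself, without losing power. CONFIG_PANIC_TIMEOUT must be set so that the system reboots automatically after a panic:

- A negative value such as -1 reboots immediately.
- A positive value reboots after that many seconds.
- 0 waits forever.

Device tree (DTS) configuration

The device tree can reserve the memory and configure the ramoops parameters: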

```dts
reserved-memory {
    #address-cells = <2>;
    #size-cells = <2>;
    ranges;

    ramoops_mem: ramoops_mem@110000 {
        reg = <0x0 0x110000 0x0 0xf0000>;
        reg-names = "ramoops_mem";
    };
};

ramoops {
    compatible = "ramoops";
    record-size = <0x0 0x20000>;
    console-size = <0x0 0x80000>;
    ftrace-size = <0x0 0x00000>;
    pmsg-size = <0x0 0x50000>;
    memory-region = <&ramoops_mem>;
};
```

mtdoops (MTD back-end)

Kernel configuration

```text
CONFIG_PSTORE=y
CONFIG_PSTORE_CONSOLE=y
CONFIG_PSTORE_PMSG=y
CONFIG_MTD_OOPS=y
CONFIG_MAGIC_SYSRQ=y
```

Partition configuration

The MTD partition can be specified either on the kernel command line (cmdline) or in the device tree.

Command-line method:

```text
bootargs = "console=ttyS1,115200 loglevel=8 rootwait root=/dev/mtdblock5 rootfstype=squashfs mtdoops.mtddev=pstore";
blkparts = "mtdparts=spi0.0:64k(spl)ro,256k(uboot)ro,64k(dtb)ro,128k(pstore),3m(kernel)ro,4m(rootfs)ro,-(data)";
```

Device tree method (excerpt):

```text
bootargs = "console=ttyS1,115200 loglevel=8 rootwait root=/dev/mtdblock5 rootfstype=squashfs mtdoops.mtddev=pstore";

partition@60000 {
    label = "pstore";
    reg = <0x60000 0x20000>;
};
```

blkoops (block device back-end)

Kernel configuration

```text
CONFIG_PSTORE=y
CONFIG_PSTORE_CONSOLE=y
CONFIG_PSTORE_PMSG=y
CONFIG_PSTORE_BLK=y
CONFIG_MTD_PSTORE=y
CONFIG_MAGIC_SYSRQ=y
```

Partition configuration

Both the cmdline and device tree methods are supported here as well.

Command-line method:

```text
bootargs = "console=ttyS1,115200 loglevel=8 rootwait root=/dev/mtdblock5 rootfstype=squashfs pstore_blk.blkdev=pstore";
blkparts = "mtdparts=spi0.0:64k(spl)ro,256k(uboot)ro,64k(dtb)ro,128k(pstore),3m(kernel)ro,4m(rootfs)ro,-(data)";
```

Device tree method (excerpt):

```text
bootargs = "console=ttyS1,115200 loglevel=8 rootwait root=/dev/mtdblock5 rootfstype=squashfs pstore_blk.blkdev=pstore";

partition@60000 {
    label = "pstore";
    reg = <0x60000 0x20000>;
};
```

pstore file system operations

Mount the pstore file system:

```shell
mount -t pstore pstore /sys/fs/pstore
```

After mounting, the mount command shows an entry like this:

```shell
# mount
pstore on /sys/fs/pstore type pstore (rw,relatime)
```

To trigger a kernel crash manually and test pstore, use SysRq. This requires CONFIG_MAGIC_SYSRQ:

```shell
# echo c > /proc/sysrq-trigger
```

The generated log file names differ slightly depending on the back-end:

ramoops:

```shell
# mount -t pstore pstore /sys/fs/pstore/
# cd /sys/fs/pstore/
# ls
console-ramoops-0 dmesg-ramoops-0 dmesg-ramoops-1
```

mtdoops:

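With the MTD back-end, the data is written directly to the MTD partition, and the raw data can be read from the MTD device node. In the cmdline partition layout above, the partitions are numbered from 0 (spl, uboot, dtb, pstore), so pstore is partition 3 and its node is /dev/mtd3: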

```shell
# cat /dev/mtd3 > 1.txt
# cat 1.txt
```

blkoops:

```shell
# cd /sys/fs/pstore/
# ls
dmesg-pstore_blk-0 dmesg-pstore_blk-1
```

Summary

pstore initialization flow:

```text
ramoops_init
ramoops_register_dummy
ramoops_probe
pstore_register
```

pstore data saving flow (on panic):

```text
register a pstore_dumper
// when a panic happens, kmsg_dump is called
call dumper->dump
pstore_dump
```

pstore data reading flow (when the file system is mounted):

```text
ramoops_probe
persistent_ram_post_init
pstore_register
pstore_get_records
ramoops_pstore_read
pstore_decompress (only for dmesg)
pstore_mkfile (save to files)
```

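pstore is an important tool for debugging Linux kernel crashes, and its modular design makes it very flexible. Whether the back-end is RAM, MTD or a block device, developers can configure it to suit the available hardware and their needs. pstore then captures the key log information when the system hits a fatal error, which is essential for post-mortem debugging of embedded systems and servers.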
